The son of an Ethiopian chemistry professor who was killed during unrest in the country last year has filed a lawsuit against Meta, Facebook’s parent company, alleging that the social media platform is fueling viral hate and violence, harming people across eastern and southern Africa.

Abrham Meareg Amare claims in the suit that his father, Meareg Amare, a 60-year-old Tigrayan academic, was gunned down outside his home in Bahir Dar, the capital city of Ethiopia’s Amhara region, in November 2021, after a string of hateful messages posted on Facebook slandered and doxed the professor and called for his murder.

The case is a constitutional petition filed in Kenya’s High Court, which has jurisdiction over the issue, as Facebook’s content moderation operation hub for much of east and south Africa is located in Nairobi.

It accuses Facebook’s algorithm of prioritizing dangerous, hateful and inciteful content in pursuit of engagement and advertising profits in Kenya.

“They have suffered human rights violations as a result of the Respondent failing to take down Facebook posts that violated the bill of rights even after making reports to the Respondent,” reads the complaint.

The legal filing alleges that Facebook has failed to invest adequately in content moderation in countries across Africa, Latin America and the Middle East, particularly from its hub in Nairobi.

It also claims that Meta’s failure to deal with these core safety issues has fanned the flames of Ethiopia’s civil war.

In a statement to CNN, Meta did not directly respond to the lawsuit:

“We have strict rules which outline what is and isn’t allowed on Facebook and Instagram. Hate speech and incitement to violence are against these rules and we invest heavily in teams and technology to help us find and remove this content. Our safety and integrity work in Ethiopia is guided by feedback from local civil society organizations and international institutions.”

Meareg said his father was followed home from Bahir Dar University, where he had worked for four years running one of the country’s largest laboratories, and was shot twice at close range by a group of men.

He said the men were chanting “junta,” echoing a false claim circulating about his father on Facebook that he had been a member of the Tigray People’s Liberation Front (TPLF), which has been locked in a war with the Ethiopian federal government for two years.

Meareg said he had tried desperately to get Facebook to remove some of the posts, which included a photo of his father and his home address, but did not receive a reply until after his father was killed.

An investigation into the murder by the Ethiopian Human Rights Commission, included in the filing and seen by CNN, confirmed that Meareg Amare was killed at his residence by armed assailants, but that their identity was unknown.

“If Facebook had just stopped the spread of hate and moderated posts properly, my father would still be alive,” Meareg said in a statement, adding that one of the posts calling for his father’s death was still on the platform.

“I’m taking Facebook to court, so no one ever suffers as my family has again. I’m seeking justice for millions of my fellow Africans hurt by Facebook’s profiteering — and an apology for my father’s murder.”

Meareg is launching the lawsuit with Fisseha Tekle, a legal advisor and former Ethiopia researcher at Amnesty International, and the Katiba Institute, a Kenyan human rights group.

The plaintiffs are asking the court to order Meta to demote violent content, increase content moderation staff in Nairobi and create a restitution fund of about $1.6 billion for victims of hate and violence incited on Facebook.

Ethiopia is an ethnically and religiously diverse nation of about 110 million people who speak scores of languages. Its two largest ethnic groups, the Oromo and Amhara, make up more than 60% of the population. The Tigrayans, the third largest, are around 7%.

A Meta spokesperson said the company’s policies and safety work in Ethiopia are guided by feedback from local civil society organizations and international institutions.

“We employ staff with local knowledge and expertise, and continue to develop our capabilities to catch violating content in the most widely spoken languages in the country, including Amharic, Oromo, Somali and Tigrinya,” the spokesperson said in a statement.

According to Meareg’s filing, Meta only has 25 personnel moderating the major languages in Ethiopia. CNN could not independently confirm this number, and Facebook will not reveal exactly how many local language speakers are evaluating content in Ethiopia that has been flagged as possibly violating its standards.

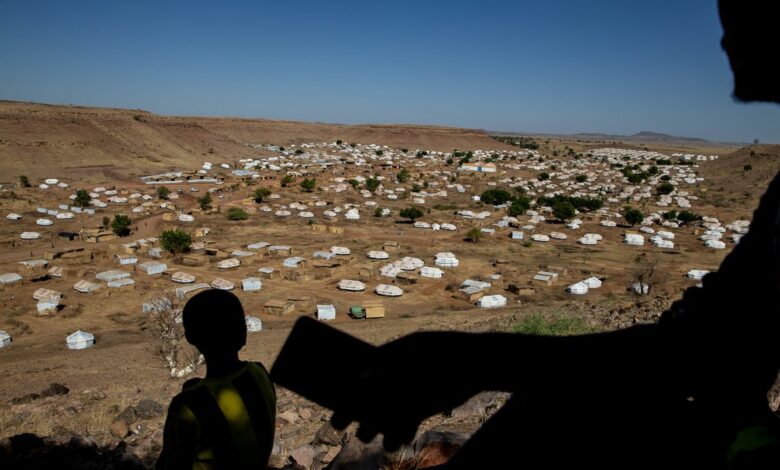

The lawsuit has been filed after two years of grinding conflict in Ethiopia, which has left thousands dead, displaced more than 2 million people and given rise to a wave of atrocities, including massacres, sexual violence and the use of starvation as a weapon of war. A report by the United Nations last year found that all parties to the conflict had “committed violations of international human rights, humanitarian and refugee law, some of which may amount to war crimes and crimes against humanity,” to varying degrees.

The Ethiopian government and the leadership of the TPLF agreed to cease hostilities in November, pledging to disarm fighters and to provide unhindered humanitarian access to Tigray and a framework for justice. But the surprise truce has left many questions unanswered, with few details on how it will be implemented and monitored.

It is not the first time Meta has been under scrutiny for its handling of user safety on its platforms, particularly in countries where hate speech online is likely to spill offline and cause harm. Last year, whistleblower Frances Haugen, a former Facebook employee, told the US Senate that the platform’s algorithm was “literally fanning ethnic violence” in Ethiopia.

Internal documents provided to Congress in redacted form by Haugen’s legal counsel, and seen by CNN, revealed that Facebook employees had repeatedly sounded the alarm on the company’s failure to curb the spread of posts inciting violence in “at risk” countries like Ethiopia. The documents also indicated that the company’s moderation efforts were no match for the flood of inflammatory content on its platform and that it had, in many cases, failed to adequately scale up staff or add local language resources to protect people in these places.

Last year, Meta’s independent oversight board recommended the company commission a human rights due diligence assessment into how Facebook and Instagram have been used to spread hate speech and misinformation, which has ratcheted up the risk of violence in Ethiopia.

Rosa Curling, a director at Foxglove, a UK-registered legal nonprofit supporting the case, compared the role that Facebook has played in fanning the flames of the Ethiopian conflict to that of the radio in inciting the Rwandan genocide.

“The consequences of the information on Facebook are so tragic and so horrific,” Curling said. “(Facebook) are failing to take any measures themselves. They’re aware of the problem. They are choosing to prioritize their own profit over the lives of Ethiopians and we’re hoping that this case will prevent that from being allowed to continue.”

Facebook has also been accused of allowing posts to stoke violence in other conflicts, namely in Myanmar, where the UN said the social media giant had promoted violence and hatred against the minority Rohingya population. A lawsuit seeking to hold Meta to account for its role in the Myanmar crisis was filed in a California court last year by a group of Rohingya refugees, who are seeking $150 billion in compensation.

The social media company has acknowledged that it didn’t do enough to prevent its platform being used to fuel bloodshed, and Chief Executive Mark Zuckerberg wrote an open letter apologizing to activists and promising to increase its moderation efforts.